Page 71 - JSOM Summer 2024


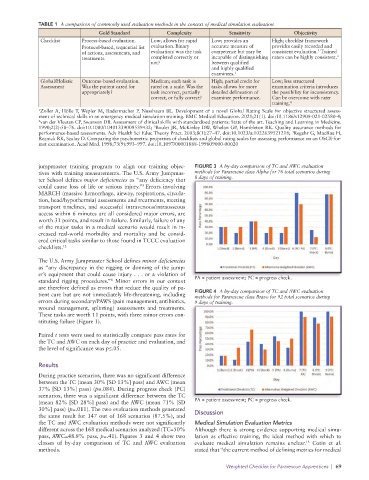

TABLE 1 A comparison of commonly used evaluation methods in the context of medical simulation evaluation

| Method | Gold Standard | Complexity | Sensitivity | Objectivity |
|---|---|---|---|---|
| Checklist | Process-based evaluation. Protocol-based, sequential list of actions, assessments, and treatments. | Low; allows for rapid evaluation. Binary evaluation: was the task completed correctly or not? | Low; provides an accurate measure of competence but may be incapable of distinguishing between qualified and highly qualified examinees.1 | High; checklist framework provides easily recorded and consistent evaluation.2 Trained raters can be highly consistent.3 |
| Global/Holistic Assessment | Outcome-based evaluation. Was the patient cared for appropriately? | Medium; each task is rated on a scale. Was the task incorrect, partially correct, or fully correct? | High; partial credit for tasks allows for more detailed delineation of examinee performance. | Low; less structured examination criteria introduce the possibility of inconsistency. Can be overcome with rater training.4 |

1 Zoller A, Hölle T, Wepler M, Radermacher P, Nussbaum BL. Development of a novel Global Rating Scale for objective structured assessment of technical skills in an emergency medical simulation training. BMC Med Educ. 2021;21(1). doi:10.1186/s12909-021-02580-4

2 van der Vleuten CP, Swanson DB. Assessment of clinical skills with standardized patients: State of the art. Teach Learn Med. 1990;2(2):58–76. doi:10.1080/10401339009539432

3 Boulet JR, McKinley DW, Whelan GP, Hambleton RK. Quality assurance methods for performance-based assessments. Adv Health Sci Educ Theory Pract. 2003;8(1):27–47. doi:10.1023/a:1022639521218

4 Regehr G, MacRae H, Reznick RK, Szalay D. Comparing the psychometric properties of checklists and global rating scales for assessing performance on an OSCE-format examination. Acad Med. 1998;73(9):993–997. doi:10.1097/00001888-199809000-00020
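The sensitivity contrast in Table 1 can be made concrete with a short sketch that scores the same hypothetical performances two ways: as a binary checklist (credit only for a fully correct task) and as a per-task rating scale with partial credit. The three-level `Rating` scale, the function names, and the examinee data are illustrative assumptions, not instruments from the cited studies.

```python
from enum import IntEnum


class Rating(IntEnum):
    """Hypothetical three-level task rating (illustrative only)."""
    INCORRECT = 0
    PARTIAL = 1
    CORRECT = 2


def checklist_score(ratings: list[Rating]) -> float:
    """Binary checklist: a task earns credit only if fully correct."""
    done = sum(1 for r in ratings if r == Rating.CORRECT)
    return done / len(ratings)


def rating_scale_score(ratings: list[Rating]) -> float:
    """Scaled rating: partially correct tasks earn partial credit."""
    return sum(int(r) for r in ratings) / (Rating.CORRECT * len(ratings))


# Two hypothetical examinees who fully complete the same number of tasks.
examinee_a = [Rating.CORRECT, Rating.CORRECT, Rating.INCORRECT, Rating.INCORRECT]
examinee_b = [Rating.CORRECT, Rating.CORRECT, Rating.PARTIAL, Rating.PARTIAL]

for name, ratings in [("A", examinee_a), ("B", examinee_b)]:
    print(name, checklist_score(ratings), rating_scale_score(ratings))
# A 0.5 0.5
# B 0.5 0.75
```

Both examinees earn the same checklist score, but the rating scale separates them, mirroring the table's point that partial credit allows more detailed delineation of examinee performance.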

jumpmaster training program to align our training objectives with training measurements. The U.S. Army Jumpmaster School defines major deficiencies as “any deficiency that could cause loss of life or serious injury.”8 Errors in MARCH (massive hemorrhage, airway, respirations, circulation, head/hypothermia) assessments and treatments, failure to meet transport timelines, and failure to obtain intravenous/intraosseous access within 6 minutes are all considered major errors, are worth 31 points, and result in failure. Similarly, failing any of the major tasks in a medical scenario would increase real-world morbidity and mortality, so these tasks are considered critical tasks, similar to those found in TCCC evaluation checklists.12

The U.S. Army Jumpmaster School defines minor deficiencies as “any discrepancy in the rigging or donning of the jumper’s equipment that could cause injury . . . or a violation of standard rigging procedures.”8 Minor errors in our context are therefore defined as errors that reduce the quality of patient care but are not immediately life-threatening, including errors during secondary/PAWS (pain management, antibiotics, wound management, splinting) assessments and treatments. These tasks are worth 11 points, with three minor errors constituting failure (Figure 1).

FIGURE 3 A by-day comparison of TC and AWC evaluation methods for Pararescue class Alpha for 76 total scenarios during 8 days of training.
PA = patient assessment; PC = progress check.

FIGURE 4 A by-day comparison of TC and AWC evaluation methods for Pararescue class Bravo for 92 total scenarios during 9 days of training.
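The major/minor weighting can be sketched as a small scoring routine. The 31- and 11-point weights and the two failure conditions (any major error; three minor errors) come from the text. Treating the points as a cumulative per-scenario total, and the 31-point cutoff used in `passed_by_points`, are assumptions: because 3 × 11 = 33 ≥ 31 while 2 × 11 = 22 < 31, that single cutoff happens to reproduce both stated rules. The class and method names are hypothetical.

```python
from dataclasses import dataclass

# Weights from the text.
MAJOR_ERROR_POINTS = 31
MINOR_ERROR_POINTS = 11
MINOR_ERRORS_TO_FAIL = 3  # three minor errors constitute failure

# Assumption: a cumulative total at or above this cutoff fails the scenario.
FAIL_CUTOFF_POINTS = 31


@dataclass
class ScenarioResult:
    """Error counts for one simulated scenario (hypothetical structure)."""
    major_errors: int  # e.g., MARCH, transport-timeline, or IV/IO access errors
    minor_errors: int  # e.g., secondary/PAWS assessment or treatment errors

    def points(self) -> int:
        """Total weighted error points, assuming points accumulate per error."""
        return (self.major_errors * MAJOR_ERROR_POINTS
                + self.minor_errors * MINOR_ERROR_POINTS)

    def passed(self) -> bool:
        """Stated rule: any major error, or three minor errors, fails."""
        return self.major_errors == 0 and self.minor_errors < MINOR_ERRORS_TO_FAIL

    def passed_by_points(self) -> bool:
        """Assumed single-threshold form of the same rule."""
        return self.points() < FAIL_CUTOFF_POINTS


for result in (ScenarioResult(0, 2), ScenarioResult(0, 3), ScenarioResult(1, 0)):
    print(result.points(), result.passed(), result.passed_by_points())
# 22 True True
# 33 False False
# 31 False False
```

Keeping the explicit rule and the threshold form side by side makes it easy to check that they still agree if the weights are changed.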

Paired t tests were used to compare the TC and AWC pass rates on each day of practice and evaluation, with the level of significance set at p≤.05.
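Because each day is scored under both methods, the paired test works on the per-day differences d between TC and AWC pass rates: t = mean(d) / (s_d / √n), with n − 1 degrees of freedom, where n is the number of days. A minimal standard-library sketch follows; the function name and the pass-rate values are hypothetical, not data from this study.

```python
import math
from statistics import mean, stdev


def paired_t(tc_rates: list[float], awc_rates: list[float]) -> tuple[float, int]:
    """Return the paired t statistic and degrees of freedom.

    Index i in both lists is the same training day scored by TC and AWC.
    """
    if len(tc_rates) != len(awc_rates) or len(tc_rates) < 2:
        raise ValueError("need two equal-length lists with at least two days")
    diffs = [tc - awc for tc, awc in zip(tc_rates, awc_rates)]
    n = len(diffs)
    sd = stdev(diffs)  # sample SD of the per-day differences
    if sd == 0:
        raise ValueError("differences are constant; t is undefined")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Hypothetical per-day pass rates (%) for four days.
t, df = paired_t([20, 40, 30, 50], [30, 40, 40, 60])
print(f"t = {t:.2f}, df = {df}")  # t = -3.00, df = 3
```

The p-value comes from the t distribution with df degrees of freedom, which the standard library does not provide; SciPy's `scipy.stats.ttest_rel` runs the same paired test and returns the p-value directly. For reference, the two-tailed critical value at p = .05 with 3 degrees of freedom is about 3.18, so this hypothetical |t| of 3.00 would not reach significance.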

Results

During practice scenarios, there was no significant difference between the TC (mean 30% [SD 13%] pass) and the AWC (mean 37% [SD 13%] pass) (p=.084). During progress check (PC) scenarios, there was a significant difference between the TC (mean 82% [SD 28%] pass) and the AWC (mean 71% [SD 30%] pass) (p=.011). The two evaluation methods generated the same result for 147 of 168 scenarios (87.5%), and the TC and AWC evaluation methods were not significantly different across the 168 medical scenarios analyzed (TC=50% pass, AWC=48.8% pass, p=.41). Figures 3 and 4 show by-day comparisons of the TC and AWC evaluation methods for the two classes (PA = patient assessment; PC = progress check).

Discussion

Medical Simulation Evaluation Metrics

Although there is strong evidence supporting medical simulation as effective training, the ideal method with which to evaluate medical simulation remains unclear. Cotin et al.13 stated that “the current method of defining metrics for medical

Weighted Checklist for Pararescue Apprentices | 69